Amazon is reshaping its logistics by pairing AI with autonomous systems across a fleet of 520,000 AI-driven warehouse robots. The strategy cuts fulfillment costs by 20% and raises hourly throughput by 40%, turning isolated analytics into self-optimizing action loops that run at machine speed.

This guide provides a technical blueprint for implementing goal-directed autonomous systems, breaking down the design choices, platforms, and safeguards needed to move from proof of concept to production without losing control.

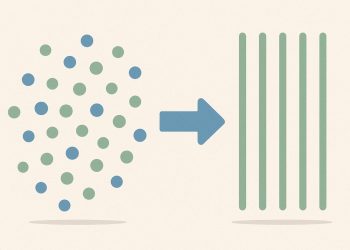

Core Architecture: Sense, Plan, Act

The ‘Sense, Plan, Act’ model forms the core of autonomous systems. Sensors gather data from the environment, AI planners evaluate options based on goals and constraints, and the execution layer issues commands to robots or software agents, creating a continuous, self-optimizing operational loop.

Every goal-directed autonomous system runs on this closed loop. Sensors and APIs stream raw event data and planners rank options, but data integration remains the primary bottleneck. Informatica, the market leader, still powers 40% of enterprise data integration, using AI to clean, tag, and route data under robust governance. Pairing this data backbone with a modern planning engine such as Azure ML or Anthropic Claude can deliver millisecond state synchronization and decision latency under 200 ms in typical retail pilots.
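The closed loop can be illustrated with a toy example. This is a minimal sketch under stated assumptions, not a reference design. The `sense`, `plan`, and `run_cycle` functions and the one-dimensional "level" environment are hypothetical stand-ins for real sensors, planners, and actuators. `DECISION_BUDGET_S` borrows the 200 ms pilot figure as an assumed per-cycle budget. If planning overruns that budget, the loop falls back to a safe "hold" action.

```python
import time
from dataclasses import dataclass
from typing import Callable, Dict, List

DECISION_BUDGET_S = 0.200  # assumed per-cycle budget, echoing the 200 ms pilot figure


@dataclass
class Option:
    action: str
    score: float


def sense(read_sensors: Callable[[], Dict[str, float]]) -> Dict[str, float]:
    """Sense: snapshot the current environment state."""
    return read_sensors()


def plan(state: Dict[str, float], goal: float) -> List[Option]:
    """Plan: rank candidate actions by how close each leaves the state to the goal."""
    level = state["level"]
    outcomes = {"increase": level + 1, "decrease": level - 1, "hold": level}
    options = [Option(action, -abs(goal - value)) for action, value in outcomes.items()]
    return sorted(options, key=lambda o: o.score, reverse=True)


def run_cycle(read_sensors: Callable[[], Dict[str, float]],
              act: Callable[[str], None], goal: float) -> str:
    """One sense-plan-act iteration; falls back to 'hold' if planning is too slow."""
    start = time.perf_counter()
    options = plan(sense(read_sensors), goal)
    elapsed = time.perf_counter() - start
    chosen = options[0].action if elapsed <= DECISION_BUDGET_S else "hold"
    act(chosen)  # Act: hand the decision to the execution layer
    return chosen


def simulate(goal: float, cycles: int) -> float:
    """Drive a toy one-dimensional 'environment' toward the goal."""
    env = {"level": 0.0}

    def act(action: str) -> None:
        if action == "increase":
            env["level"] += 1
        elif action == "decrease":
            env["level"] -= 1

    for _ in range(cycles):
        run_cycle(lambda: dict(env), act, goal)
    return env["level"]


print(simulate(goal=3.0, cycles=5))  # 3.0
```

The fallback design choice matters more than the toy planner. When a decision arrives late, it is treated as no decision, so a slow planner never issues a stale command.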

Latency requirements dictate the orchestration style. For sub-second physical control, embed lightweight planners on edge devices and leave more complex policy checks to the cloud. For back-office tasks such as content remediation, batch planning is sufficient, provided state updates are atomic and idempotent.
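The atomicity and idempotency requirement for batch planning can be made concrete. Here is a minimal sketch of a batch applier that commits all updates or none and skips updates it has already applied. The `Store` and `Update` classes and the `"<batch-id>:<doc-id>"` key format are hypothetical. In production, atomicity would typically come from a database transaction rather than in-memory copies.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Set


@dataclass(frozen=True)
class Update:
    key: str       # idempotency key, e.g. "<batch-id>:<doc-id>" (assumed format)
    doc_id: str
    new_text: str


@dataclass
class Store:
    docs: Dict[str, str] = field(default_factory=dict)
    applied: Set[str] = field(default_factory=set)

    def apply_batch(self, batch: List[Update]) -> int:
        """Apply all updates or none; replayed keys are skipped. Returns the count applied."""
        staged_docs = dict(self.docs)       # work on copies so a failure leaves state untouched
        staged_applied = set(self.applied)
        count = 0
        for update in batch:
            if update.key in staged_applied:
                continue  # already applied: replaying the batch is a no-op
            if update.doc_id not in staged_docs:
                raise KeyError(f"unknown document {update.doc_id!r}; batch rejected")
            staged_docs[update.doc_id] = update.new_text
            staged_applied.add(update.key)
            count += 1
        # Commit by swapping in the staged copies only after every update succeeded.
        self.docs, self.applied = staged_docs, staged_applied
        return count


store = Store(docs={"a": "old", "b": "old"})
batch = [Update("b1:a", "a", "new"), Update("b1:b", "b", "new")]
print(store.apply_batch(batch))  # 2
print(store.apply_batch(batch))  # 0, because a retry changes nothing
```

Together, these properties are what make batch retries safe. A job that crashes halfway can simply be re-run without double-applying edits.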

Choosing the Right Integration Stack

A successful integration stack should be structured around the sense-plan-act framework:

- Data connectors: Airbyte for open-source flexibility or Fivetran for self-healing ETL

- Orchestration engine: Domo Workflows for low-code business logic

- Agent layer: Instalily composable agents for tasks spanning multiple systems

- Observability: Sisense dashboards with trace IDs linking back to raw sensor events

Treat APIs as immutable product contracts: enforce versioning and linting, and gate breaking changes behind feature flags. A canary deployment, which routes a small percentage of traffic to the new pipeline, is essential for collecting latency and error metrics. In Microsoft’s logistics simulations, for instance, canary deployments detected 24 percent planning drift before a full rollout, heading off significant operational issues.
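A canary split needs two pieces: deterministic routing and a health comparison. This is a minimal sketch of both under stated assumptions. `CANARY_PERCENT`, `routes_to_canary`, `canary_healthy`, and the tolerance defaults are all hypothetical illustration values, not figures from the Microsoft simulations. Routing hashes each request or robot ID with SHA-256, so the same entity always lands in the same arm across restarts. Python's built-in `hash()` is salted per process and would not be stable.

```python
import hashlib
from statistics import quantiles
from typing import List

CANARY_PERCENT = 5.0  # share of traffic sent to the new pipeline (assumed)


def routes_to_canary(request_id: str, percent: float = CANARY_PERCENT) -> bool:
    """Map the ID to one of 10,000 stable buckets; low buckets go to the canary."""
    digest = hashlib.sha256(request_id.encode()).digest()
    bucket = int.from_bytes(digest[:4], "big") % 10_000  # 0..9999
    return bucket < percent * 100


def p95(latencies_ms: List[float]) -> float:
    """95th percentile: the last of the 19 cut points that split data into 20 groups."""
    return quantiles(latencies_ms, n=20)[-1]


def canary_healthy(base_err: float, canary_err: float,
                   base_lat: List[float], canary_lat: List[float],
                   max_err_delta: float = 0.001, max_lat_ratio: float = 1.10) -> bool:
    """Pass only if the canary's error rate and p95 latency stay within tolerance."""
    return (canary_err - base_err <= max_err_delta
            and p95(canary_lat) <= p95(base_lat) * max_lat_ratio)
```

In use, traffic for which `routes_to_canary(robot_id)` is true goes to the new pipeline. Rollout proceeds only while `canary_healthy(...)` keeps returning true on the collected metrics.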

Safety, Testing, and Human Oversight

Autonomy introduces new attack surfaces that demand robust protection. Experts recommend a dual approach: physical interlocks such as light curtains, combined with digital AI firewalls that halt any command violating established policy. With autonomous agents predicted to outnumber humans 82 to 1, identity spoofing, including deepfake-driven impersonation, is a top concern. Mandate signed commands and rotate machine credentials hourly to mitigate these risks.
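Signed commands with hourly credential rotation can be illustrated with Python's standard `hmac` module. This is a minimal sketch under stated assumptions. The command fields, the per-hour key derivation in `hourly_key`, and the `MAX_SKEW_S` replay window are hypothetical. A production fleet would more likely use asymmetric signatures and a managed secrets service than a shared master secret.

```python
import hashlib
import hmac
import json

MASTER_SECRET = b"replace-with-secret-from-a-vault"  # placeholder; never hard-code in practice
MAX_SKEW_S = 5  # reject commands older (or newer) than this, to blunt replays (assumed)


def hourly_key(epoch_s: float) -> bytes:
    """Derive a distinct key per clock hour, so a leaked hourly key expires on its own."""
    hour = int(epoch_s // 3600)
    return hmac.new(MASTER_SECRET, f"hour:{hour}".encode(), hashlib.sha256).digest()


def sign(command: dict, now: float) -> dict:
    """Attach a timestamp and an HMAC-SHA256 signature over the canonical JSON body."""
    body = dict(command, ts=now)
    payload = json.dumps(body, sort_keys=True).encode()
    sig = hmac.new(hourly_key(now), payload, hashlib.sha256).hexdigest()
    return {"body": body, "sig": sig}


def verify(message: dict, now: float) -> bool:
    """Accept only fresh commands whose signature matches the key for their hour."""
    body = message["body"]
    if abs(now - body["ts"]) > MAX_SKEW_S:
        return False  # stale or future-dated: possible replay
    payload = json.dumps(body, sort_keys=True).encode()
    expected = hmac.new(hourly_key(body["ts"]), payload, hashlib.sha256).hexdigest()
    return hmac.compare_digest(expected, message["sig"])  # constant-time comparison
```

A robot runs `verify` on every inbound command and discards anything that fails. A spoofed or replayed instruction is then dropped before it reaches the policy layer.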

Simulation provides the most cost-effective safety net. Digital twins of facilities or content networks let you fuzz-test edge cases, inject packet loss, and verify graceful degradation under stress. CoTester agents can also automate UI test creation and self-healing, so regression coverage keeps pace with rapid release cycles.
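Fuzz testing in a digital twin comes down to generating many randomized scenarios and checking that invariants always hold. Here is a minimal sketch. `assign_tasks` is a hypothetical toy greedy planner standing in for a real one. The invariants are examples of properties worth checking: no robot routed into a blocked aisle, no unknown robots, and no idle capacity. The random generator is seeded, so any failing scenario can be replayed exactly.

```python
import random
from typing import Dict, List, Set


def assign_tasks(robots: List[str], tasks: List[int], blocked: Set[int]) -> Dict[str, int]:
    """Toy greedy planner: pair each robot with the next task in an open aisle."""
    reachable = [aisle for aisle in tasks if aisle not in blocked]
    return dict(zip(robots, reachable))


def check_invariants(plan: Dict[str, int], robots: List[str],
                     tasks: List[int], blocked: Set[int]) -> None:
    """Raise AssertionError if the plan violates a safety or capacity property."""
    if any(aisle in blocked for aisle in plan.values()):
        raise AssertionError("robot routed into a blocked aisle")
    if not set(plan) <= set(robots):
        raise AssertionError("plan assigns work to an unknown robot")
    reachable = sum(1 for aisle in tasks if aisle not in blocked)
    if len(plan) != min(len(robots), reachable):
        raise AssertionError("planner left usable capacity idle")


def fuzz(iterations: int = 1_000, seed: int = 7) -> int:
    """Generate random edge cases (no robots, no tasks, every aisle blocked, ...)."""
    rng = random.Random(seed)  # seeded so any failure is reproducible
    for _ in range(iterations):
        robots = [f"r{i}" for i in range(rng.randint(0, 5))]
        tasks = [rng.randint(0, 9) for _ in range(rng.randint(0, 8))]
        blocked = set(rng.sample(range(10), rng.randint(0, 10)))
        plan = assign_tasks(robots, tasks, blocked)
        check_invariants(plan, robots, tasks, blocked)
    return iterations
```

Degenerate inputs such as zero robots or every aisle blocked come up naturally. The harness therefore also confirms that the planner degrades to an empty plan instead of crashing.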

Auditability is non-negotiable for compliance. Log the complete decision tree behind each automated action and stream its hash to an immutable ledger. Enterprises using such tamper-evident logs report cutting forensic investigation times from days to minutes.
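A hash chain makes a log tamper-evident. This is a minimal sketch of the idea. Each record's SHA-256 digest covers both the decision and the previous record's digest, so editing any earlier entry breaks every later link. The `AuditLog` class and the decision fields are hypothetical. Streaming the returned head hash to an external immutable ledger is assumed and not shown.

```python
import copy
import hashlib
import json
from typing import Any, Dict, List

GENESIS = "0" * 64  # placeholder "previous hash" for the first record


class AuditLog:
    def __init__(self) -> None:
        self.entries: List[Dict[str, Any]] = []

    @staticmethod
    def _digest(prev: str, decision: Dict[str, Any]) -> str:
        record = json.dumps({"prev": prev, "decision": decision}, sort_keys=True)
        return hashlib.sha256(record.encode()).hexdigest()

    def append(self, decision: Dict[str, Any]) -> str:
        """Store a copy of the decision, chained to the previous record's hash."""
        prev = self.entries[-1]["hash"] if self.entries else GENESIS
        decision = copy.deepcopy(decision)  # later caller edits must not alter the log
        digest = self._digest(prev, decision)
        self.entries.append({"prev": prev, "decision": decision, "hash": digest})
        return digest  # the head hash you would stream to the ledger

    def verify(self) -> bool:
        """Recompute every link; any edited, dropped, or reordered entry fails."""
        prev = GENESIS
        for entry in self.entries:
            if entry["prev"] != prev or self._digest(prev, entry["decision"]) != entry["hash"]:
                return False
            prev = entry["hash"]
        return True
```

Because only the latest hash needs to reach the ledger, an investigator can quickly confirm that the whole local log is intact by checking that one value against the ledger copy.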

Scaling to Multi-Domain Use Cases

Once the core autonomous loop is proven and trusted, it can be extended to new domains quickly. Unilever feeds demand-sensing AI into its supply planning, using local event and weather forecasts to keep on-shelf availability above 95 percent. In media, policy engines automatically route user-generated content through AI detectors and human review queues, so flagged clips can be reviewed and published in under two minutes. Logistics leaders such as DHL save 10 million delivery miles annually by combining predictive dispatch with smart trucks.

These patterns (stable data contracts, policy-aware planners, and observable execution) apply across domains. Mastering them turns goal-directed autonomy from a one-off science project into a repeatable, high-impact enterprise capability.

How did Amazon achieve a 20% reduction in fulfillment costs with 520,000 AI robots?

By pairing a sense-plan-act architecture with warehouse-wide orchestration, Amazon has used its robot fleet to cut fulfillment costs by 20% while processing 40% more orders per hour. Computer-vision picking reaches 99.8% accuracy, and every robot movement is logged for audit and rollback. The keys are low-latency state synchronization between the robots and the warehouse management system (WMS), real-time error-handling policies, and canary deployments that test new behavior on 1% of the fleet before a global rollout.

Which safety systems prevent an autonomous robot from harming workers?

Amazon uses light-curtain emergency stops, collision sensors, and fail-safe software that halts any robot when a human crosses a defined perimeter. A human override pendant can freeze an entire zone within 200 ms, while policy engines enforce speed limits and force thresholds. Continuous audit logs and digital-twin replay let engineers trace every near-miss and update rules without touching production code.
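A policy engine of this kind sits between the planner and the motors and vets every command. Here is a minimal sketch. The rectangular `Zone` perimeter, `MAX_SPEED_MPS`, and `MAX_FORCE_N` are hypothetical illustration values, not Amazon's actual thresholds. In the sketch, over-speed commands are clamped, while excessive force, a human inside the perimeter, or a pressed override pendant yields a halt.

```python
from dataclasses import dataclass, replace
from typing import List, Optional, Tuple

MAX_SPEED_MPS = 1.5  # assumed speed limit
MAX_FORCE_N = 50.0   # assumed force threshold


@dataclass(frozen=True)
class Command:
    robot_id: str
    speed_mps: float
    force_n: float


@dataclass(frozen=True)
class Zone:
    x_min: float
    x_max: float
    y_min: float
    y_max: float

    def contains(self, x: float, y: float) -> bool:
        return self.x_min <= x <= self.x_max and self.y_min <= y <= self.y_max


def enforce(cmd: Command, zone: Zone, humans: List[Tuple[float, float]],
            zone_frozen: bool) -> Optional[Command]:
    """Return a policy-compliant command, or None to signal 'halt'."""
    if zone_frozen:
        return None  # override pendant pressed: freeze the whole zone
    if any(zone.contains(x, y) for x, y in humans):
        return None  # a human is inside the robot's perimeter
    if cmd.force_n > MAX_FORCE_N:
        return None  # excessive force is refused outright, not clamped
    return replace(cmd, speed_mps=min(cmd.speed_mps, MAX_SPEED_MPS))  # clamp speed
```

Keeping these rules in a separate function, rather than inside the planner, lets engineers tighten thresholds after a near-miss without redeploying planning code.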

How do you keep 520,000 robots from drifting out of sync?

The platform relies on tight API contracts (version-locked protobuf schemas) and millisecond-level state sync over an event mesh. Robots publish pose, battery, and task status every 100 ms; the orchestrator publishes global constraints (blocked aisles, new high-priority orders) with guaranteed delivery. If latency exceeds 300 ms or data loss exceeds 0.1%, the robot defaults to a safe park position and waits for fresh instructions.
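The safe-park fallback can be sketched as a small robot-side watchdog. This sketch uses the 300 ms and 0.1% thresholds from the answer above, but the sequence-number scheme, the sliding window size, and the `LinkWatchdog` class are assumptions. It treats gaps in increasing sequence numbers as lost messages and treats prolonged silence as unbounded latency. Once parked, it stays parked until fresh instructions arrive.

```python
from collections import deque
from enum import Enum
from typing import Deque

MAX_LATENCY_MS = 300.0
MAX_LOSS_RATE = 0.001  # 0.1%


class Mode(Enum):
    OPERATE = "operate"
    SAFE_PARK = "safe_park"


class LinkWatchdog:
    def __init__(self, window: int = 10_000) -> None:
        self.recent: Deque[int] = deque(maxlen=window)  # recent sequence numbers
        self.mode = Mode.OPERATE

    def on_message(self, seq: int, latency_ms: float) -> Mode:
        """Record a message; park if latency or loss crosses its threshold."""
        self.recent.append(seq)
        if latency_ms > MAX_LATENCY_MS or self._loss_rate() > MAX_LOSS_RATE:
            self.mode = Mode.SAFE_PARK
        return self.mode

    def on_timer(self, ms_since_last_message: float) -> Mode:
        """Silence counts as unbounded latency, so a dead link also parks the robot."""
        if ms_since_last_message > MAX_LATENCY_MS:
            self.mode = Mode.SAFE_PARK
        return self.mode

    def on_fresh_instructions(self) -> None:
        """Leave SAFE_PARK only when the orchestrator sends new instructions."""
        self.recent.clear()
        self.mode = Mode.OPERATE

    def _loss_rate(self) -> float:
        # Assumes sequence numbers arrive in increasing order; gaps are lost messages.
        expected = self.recent[-1] - self.recent[0] + 1
        return 1 - len(self.recent) / expected
```

Parking is deliberately "sticky" in this sketch. A link that briefly recovers does not resume motion on its own; the robot waits for the orchestrator to re-plan with current state.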

What testing regimen lets Amazon ship new code daily to live robots?

Every software change goes through simulation-first validation: 50,000 virtual robots replay the previous day’s peak-hour workload in a digital twin of the facility. Only builds that beat baseline throughput by at least 2% and keep the error rate below 0.05% graduate to a 1% canary on the live floor. If SLOs hold for 30 minutes, rollout expands to 100% within two hours; otherwise, an automatic rollback returns the fleet to the previous image in 90 seconds.
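The promotion gates described above can be expressed as one decision function. This is a minimal sketch: the thresholds (at least +2% throughput, error rate below 0.05%, 30 healthy canary minutes) come from the text, while `SimResult`, `Stage`, `decide_release`, and the per-minute SLO flags are hypothetical modeling choices, not Amazon's tooling.

```python
from dataclasses import dataclass
from enum import Enum
from typing import List


class Stage(Enum):
    REJECTED = "rejected"          # failed simulation; never reaches the floor
    CANARY = "canary"              # on 1% of the fleet, still observing
    ROLLED_BACK = "rolled_back"    # canary breached an SLO; revert to previous image
    FULL_ROLLOUT = "full_rollout"  # SLOs held for 30 minutes; expand to 100%


@dataclass
class SimResult:
    throughput: float  # orders/hour when replaying the previous day's peak
    error_rate: float  # fraction of failed tasks


def passes_simulation(candidate: SimResult, baseline: SimResult) -> bool:
    return (candidate.throughput >= baseline.throughput * 1.02
            and candidate.error_rate < 0.0005)


def decide_release(candidate: SimResult, baseline: SimResult,
                   canary_minutes: List[bool]) -> Stage:
    """canary_minutes: one SLO pass/fail flag per minute observed on the 1% canary."""
    if not passes_simulation(candidate, baseline):
        return Stage.REJECTED
    if not all(canary_minutes):
        return Stage.ROLLED_BACK  # any breach triggers automatic rollback
    if len(canary_minutes) < 30:
        return Stage.CANARY
    return Stage.FULL_ROLLOUT
```

Rollback takes priority over waiting. A single failed minute ends the canary immediately instead of letting a bad build run out the full observation window.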

Where else is this “robot-first” playbook being copied?

Outside e-commerce, the same stack now automates content publishing and remediation: bots crawl source systems, rewrite obsolete paragraphs, and redistribute articles overnight. Microsoft’s hardware supply chain cut planning time from four days to 30 minutes, and Maersk’s refrigerated fleet uses the model to protect $1 billion of temperature-sensitive inventory. Any industry that needs goal-directed, lights-out operations can adopt the architecture; only the end-effectors change.