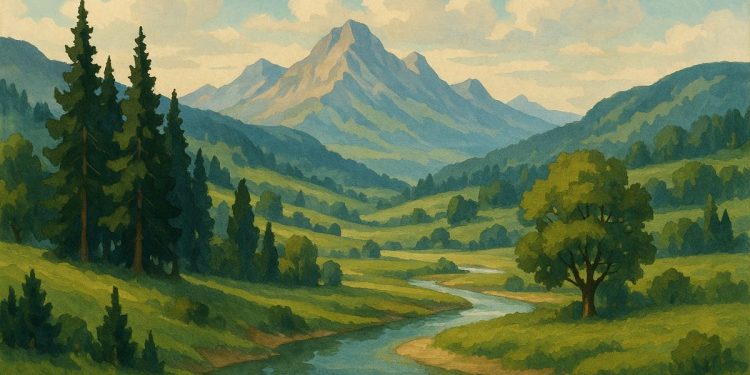

Generative AI models like ChatGPT need a lot of fresh water to keep their servers cool. A single large data center can use up to 2 million litres of water a day, and data centers worldwide use about 560 billion litres a year. This heavy water use is a problem in places that are already short of water. New cooling technology and new rules are starting to help, but every time you use AI, it quietly uses water behind the scenes. When you see AI answers like this, remember there’s a hidden river making it possible.

What is the water footprint of generative AI models?

Generative AI models like ChatGPT consume large amounts of water because the data centers that run them need cooling. A single data center can use up to 2 million litres of fresh water per day, and data centers worldwide use about 560 billion litres a year, much of it in regions that already face water scarcity.

Each time you ask ChatGPT a question, a hidden river runs.

Behind the friendly text sits a 100-megawatt data center that can swallow 2 million litres of fresh water in 24 hours, enough for 6,500 American households. Scale that up to the 560 billion litres the global fleet drinks every year and you have 224,000 Olympic pools that never see a swimmer.
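The comparisons above are simple division, and they are easy to check. Here is a minimal sketch; the pool volume of 2.5 million litres is an assumption (a 50 m × 25 m pool, 2 m deep), and every other number is taken from the text.

```python
# Back-of-the-envelope check of the comparisons quoted in the text.
# Assumption: an Olympic pool holds 2.5 million litres (50 m x 25 m x 2 m).
OLYMPIC_POOL_LITRES = 2_500_000

daily_use_litres = 2_000_000            # one 100 MW data center, per day (from the text)
households = 6_500                      # households quoted in the text
global_annual_litres = 560_000_000_000  # all data centers, per year (from the text)

# What the household comparison implies per household.
litres_per_household_per_day = daily_use_litres / households

# How many pools the global annual figure would fill.
olympic_pools = global_annual_litres / OLYMPIC_POOL_LITRES

# How many 2-million-litre-a-day facilities would add up to the global figure.
equivalent_facilities = global_annual_litres / (daily_use_litres * 365)

print(f"{litres_per_household_per_day:.0f} litres per household per day")
print(f"{olympic_pools:,.0f} Olympic pools per year")
print(f"{equivalent_facilities:.0f} facilities at 2 million litres a day")
```

The last line is a reminder that the global total is not one data center multiplied out: it is roughly what several hundred such facilities would use, running every day of the year.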

Why AI is so thirsty

Unlike the laptops on our desks, the racks that train or serve large language models dump enormous heat. To keep GPUs and TPUs from melting, operators spray, chill and evaporate water inside cooling towers. A single kilowatt-hour of compute typically needs two litres of water for cooling; generative workloads are pushing this ratio even higher.
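To see what a litres-per-kilowatt-hour ratio means at data center scale, here is a rough sketch. The function names are made up for illustration, and the full-load assumption (`utilization=1.0`) is ours, not the article's. It uses the 100-megawatt, 2-million-litre-a-day facility quoted earlier as the example.

```python
HOURS_PER_DAY = 24

def daily_energy_kwh(power_mw: float, utilization: float = 1.0) -> float:
    """Energy used in one day, in kWh (assumes a constant load)."""
    return power_mw * 1_000 * HOURS_PER_DAY * utilization

def daily_water_litres(power_mw: float, litres_per_kwh: float,
                       utilization: float = 1.0) -> float:
    """Cooling water per day for a given water intensity (litres per kWh)."""
    return daily_energy_kwh(power_mw, utilization) * litres_per_kwh

def implied_litres_per_kwh(power_mw: float, litres_per_day: float,
                           utilization: float = 1.0) -> float:
    """Water intensity implied by a facility's size and its daily water use."""
    return litres_per_day / daily_energy_kwh(power_mw, utilization)

energy = daily_energy_kwh(100)                     # 100 MW facility
water = daily_water_litres(100, 2.0)               # at 2 litres per kWh
implied = implied_litres_per_kwh(100, 2_000_000)   # from 2 million litres a day

print(f"{energy:,.0f} kWh per day")
print(f"{water:,.0f} litres per day at 2 L/kWh")
print(f"{implied:.2f} L/kWh implied by 2 million litres a day")
```

At full load the two figures do not line up exactly: 2 litres per kilowatt-hour would mean about 4.8 million litres a day, while 2 million litres a day implies closer to 0.8 litres per kilowatt-hour. The article does not say whether the two numbers assume the same utilization or cover the same scope, so treat both as order-of-magnitude guides.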

Hot spots under water stress

Two-thirds of the new capacity built or planned since 2022 sits in regions that are already under water stress.

| Location | Share of local water taken by one operator (2022) | Source |
|---|---|---|
| The Dalles, Oregon | 25 % | Food & Water Watch 2025 |
| Mesa, Arizona | 13 % | utility filings |
| Loudoun County, Virginia | 18 % | county estimates |

Because most centres return only 20 % of the water they withdraw (the rest vanishes as vapor), every new server row tightens the tap for farmers and residents downstream.
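The difference between water withdrawn and water consumed is worth spelling out. A minimal sketch, assuming the 20 % return rate above and treating the 2 million litres a day quoted earlier as a withdrawal figure (the article does not say which it is):

```python
def split_withdrawal(withdrawn_litres: float, return_fraction: float = 0.20):
    """Split a withdrawal into water returned and water lost to evaporation."""
    returned = withdrawn_litres * return_fraction
    consumed = withdrawn_litres - returned
    return returned, consumed

# Assumption: the 2 million litres a day is what the facility withdraws.
returned, consumed = split_withdrawal(2_000_000)

print(f"returned to the system: {returned:,.0f} litres per day")
print(f"lost as vapor:          {consumed:,.0f} litres per day")
```

Under those assumptions about 1.6 million litres a day never come back to the local supply, which is the number that matters to everyone downstream.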

What is being done?

- Better tech: Google’s latest TPU pods use direct-to-chip liquid loops that cut water loss and quadruple compute density. Microsoft now designs all new halls with zero-evaporation cooling, aiming to eliminate fresh-water draw by 2027.

- Policy gaps: The EU already forces every 500 kW-plus facility to publish annual water figures; the US still relies on voluntary reports from local utilities.

- Incentives: A July 2025 White House order unlocked federal grants and loan guarantees for data centers that pair renewable power with closed-loop or waterless cooling, hoping to steer future builds away from drought zones.

Until these fixes scale, each prompt carries an invisible litre count. The next time an AI summary flickers onto your screen, remember the quiet river that paid for it.

FAQ: The Hidden Water Bill of AI

Q1. How much water does a typical AI data center actually use?

A mid-size 100-megawatt facility swallows roughly 2 million litres of water every single day, the same amount that 6,500 American households consume. When you zoom out, global data centers drink about 560 billion litres annually, enough to fill 224,000 Olympic swimming pools. Two-thirds of the newest sites are being built in regions already suffering water stress, so every litre matters.

Q2. Why do generative models like ChatGPT have a water footprint at all?

They run on specialised hardware inside vast data centres. These processors get hot – fast. Cooling systems keep them from overheating, and the cheapest and most common method is to evaporate treated water in cooling towers. In practice, only about 20 % of the withdrawn water is returned to sewage treatment; the rest evaporates and is gone for good.

Q3. Which regions are most affected?

Look at The Dalles, Oregon: Google’s data centres now gulp 25 % of the city’s entire water supply, triple their 2017 figure. Across the United States, AI and cloud centres may soon demand up to 720 billion gallons (≈2.7 trillion litres) per year, equal to the indoor needs of 18.5 million households. Elsewhere, the Great Lakes region, Arizona and parts of the Middle East are earmarked for expansion despite already tight supplies.
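For readers who want to check the unit conversion and the household comparison, here is a small sketch. The only outside number is the definition of the US gallon in litres; the rest comes from the answer above.

```python
LITRES_PER_US_GALLON = 3.785411784  # definition of the US liquid gallon

us_demand_gallons = 720e9   # projected annual demand (from the text)
households = 18.5e6         # households quoted in the text

us_demand_litres = us_demand_gallons * LITRES_PER_US_GALLON
gallons_per_household_per_year = us_demand_gallons / households
gallons_per_household_per_day = gallons_per_household_per_year / 365

print(f"{us_demand_litres / 1e12:.2f} trillion litres per year")
print(f"{gallons_per_household_per_year:,.0f} gallons per household per year")
print(f"{gallons_per_household_per_day:.0f} gallons per household per day")
```

The conversion lands at about 2.7 trillion litres, and the household comparison works out to a little over 100 gallons of indoor use per household per day.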

Q4. Are there any new technologies that could cut this usage?

Yes. Hyperscalers are rolling out direct-to-chip and immersion liquid cooling, which can slash water and energy needs. Microsoft will make zero-water cooling the default for every new design in 2025, while Google’s liquid-cooled TPU pods have quadrupled compute density without extra evaporation. Two-phase systems that switch coolant between liquid and vapour are moving from pilot to mainstream in 2025.

Q5. What regulations exist to control AI water use?

Europe leads: every data centre of 500 kW or more must file public, annual water-use reports under the Energy Efficiency Directive, Directive (EU) 2023/1791. In the United States, oversight is local and fragmented – no federal mandate exists yet, and fewer than a third of operators even track their water consumption. Recent federal and state incentives (loans, tax breaks, fast-track permits) are encouraging greener builds, but they remain voluntary rather than compulsory.