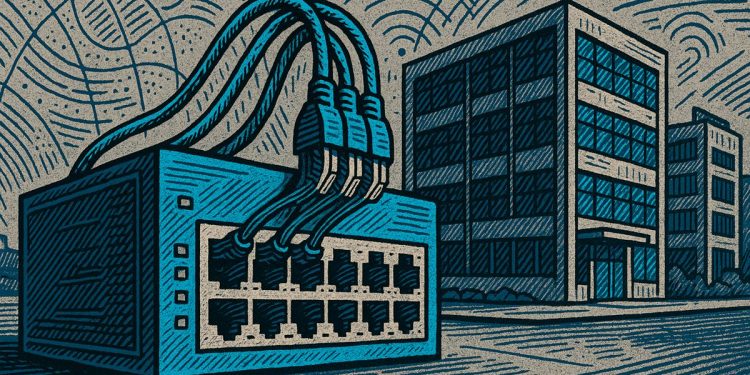

AI-ready networks are built to handle the big demands of artificial intelligence, with super-fast speeds, low delays, and strong security. Many companies want to use AI, but most of their networks aren’t ready yet, creating a big gap between what they want and what they can do. Upgrading means adding powerful hardware, smarter monitoring, and better defenses against cyber threats. These changes can be expensive and require new skills, but the payoff is fewer network problems and smoother AI performance.

What makes a network AI-ready and why is it important for enterprises?

An AI-ready enterprise network supports high bandwidth, low latency, and robust security to handle the demands of AI workloads. Key features include 400 GbE fabrics, RoCE v2, GPU-direct switches, and AI-driven monitoring, which together improve reliability and scalability and reduce outages for enterprise AI deployments.

Enterprise networks built for email and video are being pushed to their limits by AI assistants, agents, and model-training clusters. A recent Aryaka survey found only 31 percent of organizations believe their current WAN can handle AI traffic, yet 78 percent already run generative-AI pilots somewhere in the business. The gap between ambition and readiness is reshaping budgets and blueprints.

Bandwidth and latency are the first bottlenecks. Training a single large language model can move terabytes per day across east-west data-center links; inference at the edge adds thousands of many-to-many micro-flows that traditional hierarchical, north-south architectures never anticipated. Verizon’s response has been to overlay an AI-driven control plane that spots incipient outages 90 seconds faster than human monitoring and reroutes traffic before users notice.
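To put "terabytes per day" into link-sizing terms, the short sketch below converts a daily volume into an average rate and then applies a burst multiplier. The function names and the `burst_factor` parameter are illustrative assumptions, not figures from any vendor or survey; real training traffic is bursty, so the peak rather than the average is what a link has to absorb.

```python
SECONDS_PER_DAY = 24 * 60 * 60


def average_gbps(terabytes_per_day: float) -> float:
    """Average rate in Gb/s for a daily volume given in decimal terabytes."""
    bits_per_day = terabytes_per_day * 1e12 * 8
    return bits_per_day / SECONDS_PER_DAY / 1e9


def peak_gbps(terabytes_per_day: float, burst_factor: float) -> float:
    """Estimated peak rate, assuming traffic arrives in bursts.

    burst_factor is a hypothetical peak-to-average ratio that has to be
    measured on the actual fabric; it is not a published constant.
    """
    return average_gbps(terabytes_per_day) * burst_factor


# Example: 10.8 TB/day averages out to about 1 Gb/s. With an assumed
# 20x burst factor, the same workload needs roughly 20 Gb/s of headroom.
avg = average_gbps(10.8)
peak = peak_gbps(10.8, burst_factor=20)
print(f"average {avg:.2f} Gb/s, assumed peak {peak:.2f} Gb/s")
```

The gap between the two numbers is the point: a daily total can look modest on average while synchronized bursts still stress the links.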

Security obligations are also expanding. As models move closer to users via edge nodes, the potential attack surface stretches from core switches to Wi-Fi access points. Toyota North America saw incident-resolution time drop 80 percent after layering AI-based anomaly detection on its factory network, but only after hardening every edge gateway with zero-trust micro-segmentation.
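The article does not describe how any particular anomaly-detection product works, so the following is only a minimal statistical stand-in: a rolling z-score over latency samples. The function name, window size, and threshold are assumptions chosen for illustration; production systems typically use far richer models and many more signals.

```python
from collections import deque
from statistics import mean, stdev


def find_anomalies(samples, window=20, threshold=3.0):
    """Return indices of samples that deviate from the recent baseline.

    A sample is flagged when it lies more than `threshold` standard
    deviations from the mean of the previous `window` normal samples.
    """
    history = deque(maxlen=window)
    anomalies = []
    for index, value in enumerate(samples):
        if len(history) == window:
            baseline = mean(history)
            spread = stdev(history)
            # A perfectly flat baseline (spread == 0) is skipped to avoid
            # dividing by zero; nothing is flagged in that case.
            if spread > 0 and abs(value - baseline) / spread > threshold:
                anomalies.append(index)
                continue  # keep the outlier out of the baseline
        history.append(value)
    return anomalies


# Example: steady ~10 ms latency with one spike at index 20.
latency_ms = [10.0, 10.2, 9.8, 10.1, 9.9] * 4 + [25.0, 10.0, 10.1]
print(find_anomalies(latency_ms))
```

Excluding flagged samples from the baseline is a deliberate choice here: it stops a single spike from inflating the spread and masking the next one.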

Physical upgrades follow a predictable checklist: 400 GbE leaf-spine fabrics, RoCE v2 for lossless transport, and GPU-direct switches that cut server-to-server latency below five microseconds. The Ultra Ethernet Consortium, formed in 2023, is finalizing standards that push raw throughput toward 1.6 Tb/s while keeping power per bit almost flat.
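One number that usually comes up when planning a leaf-spine fabric is the oversubscription ratio of each leaf switch: server-facing capacity divided by spine-facing capacity. The sketch below computes it. The port counts are hypothetical examples, not a recommended design, and the 1:1 target mentioned in the comments is a common rule of thumb for lossless AI fabrics rather than a requirement stated in the article.

```python
def oversubscription_ratio(
    downlink_ports: int,
    downlink_gbps: float,
    uplink_ports: int,
    uplink_gbps: float,
) -> float:
    """Ratio of server-facing to spine-facing capacity on one leaf switch.

    1.0 means the leaf is non-blocking; higher values mean servers can
    offer more traffic than the uplinks can carry.
    """
    downlink_capacity = downlink_ports * downlink_gbps
    uplink_capacity = uplink_ports * uplink_gbps
    if uplink_capacity <= 0:
        raise ValueError("uplink capacity must be positive")
    return downlink_capacity / uplink_capacity


# Hypothetical legacy leaf: 48 x 100 GbE down, 4 x 400 GbE up.
print(oversubscription_ratio(48, 100, 4, 400))   # 3.0

# Hypothetical AI leaf: 32 x 400 GbE down, 32 x 400 GbE up (non-blocking).
print(oversubscription_ratio(32, 400, 32, 400))  # 1.0
```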

Edge computing is the counterweight to all that central horsepower. Celebration Church in Florida cut Wi-Fi complaints by 60 percent after deploying Wyebot sensors that use reinforcement learning to pick the cleanest channels every minute; doing the same job from the cloud would have saturated the venue’s 2 Gbps uplink during Sunday services.
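The source does not say which reinforcement-learning method the sensors use, so the sketch below swaps in one of the simplest: an epsilon-greedy bandit that mostly picks the channel with the best observed score and occasionally explores the others. The class name, the interference values, and the reward definition are all assumptions for illustration.

```python
import random


class ChannelSelector:
    """Epsilon-greedy bandit over a fixed set of Wi-Fi channels."""

    def __init__(self, channels, epsilon=0.1, seed=None):
        self.channels = list(channels)
        self.epsilon = epsilon
        self.rng = random.Random(seed)
        self.counts = {c: 0 for c in self.channels}
        self.scores = {c: 0.0 for c in self.channels}

    def best(self):
        """Channel with the highest average reward seen so far."""
        return max(self.channels, key=lambda c: self.scores[c])

    def choose(self):
        """Try every channel once, then explore with probability epsilon."""
        untried = [c for c in self.channels if self.counts[c] == 0]
        if untried:
            return untried[0]
        if self.rng.random() < self.epsilon:
            return self.rng.choice(self.channels)
        return self.best()

    def update(self, channel, reward):
        """Fold a new reward into the running average for that channel."""
        self.counts[channel] += 1
        n = self.counts[channel]
        self.scores[channel] += (reward - self.scores[channel]) / n


# Hypothetical measured interference per channel (0 = clean, 1 = saturated).
interference = {1: 0.7, 6: 0.2, 11: 0.5}
selector = ChannelSelector(interference, epsilon=0.1, seed=42)
for _ in range(60):  # e.g. one decision per minute for an hour
    channel = selector.choose()
    selector.update(channel, reward=1.0 - interference[channel])
print(selector.best())
```

Because each decision needs only a local measurement and a table update, a loop like this can run on the sensor itself, which is the argument for doing the work at the edge rather than streaming measurements to the cloud.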

Cost remains the steepest hurdle. Optical transceivers rated for AI workloads cost 3–4 times legacy parts, and retrofitting an average enterprise data center runs into seven figures. Yet Equinix reports that customers who adopted AI-ready fabrics in 2024 saw network-related outages fall by half within the first six months, an ROI story that is accelerating board-level approvals.

The talent gap is just as real. Fewer than one in four IT teams claim deep expertise in AI-ready networking, prompting many to lean on managed services or pre-validated reference designs. The coming year will likely separate the firms that treat AI networking as a one-time upgrade from those that bake continuous optimization into their operational cadence.