DeepSeek V3.1 was released quietly, letting users process much longer texts: up to the length of the entire Lord of the Rings trilogy. The update keeps the same underlying technology as V3, with no new benchmark results or major changes. Meanwhile, the next model, R2, is delayed because the company wants it to be perfect, is switching to different computer chips, and is still cleaning up messy training data. This slow, careful approach means DeepSeek only releases new tools when they are truly ready.

What are the key updates in DeepSeek V3.1, and why is R2 delayed?

DeepSeek V3.1 introduces an expanded context window exceeding 1 million tokens, maintaining the same architecture and cost as V3, with no new benchmarks. R2 remains delayed due to perfectionist retraining, a chip transition causing slower training, and challenges with data quality.

DeepSeek V3.1 quietly shipped while R2 remains in the lab

Updated 20 August 2025

What V3.1 actually brings

- Context window stretched beyond 1 million tokens – roughly the length of the entire Lord of the Rings trilogy.

- Same training recipe as V3 (2 000 H800 GPUs, ≤ $6 million spend), so no new silicon required.

- No new benchmarks released; DeepSeek describes it as a “maintenance update” to keep momentum while R2 is debugged.
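To check whether a given document would fit in a window of this size, a rough word-to-token conversion is usually enough for planning. The sketch below is a minimal illustration that assumes about 0.75 English words per token. That ratio is a common rule of thumb for BPE tokenizers, not a figure DeepSeek has published. It also assumes a hypothetical 8,000-token budget reserved for the model's reply. Only the 1,000,000-token window comes from the figure quoted above.

```python
import math

# Assumption: ~0.75 English words per token. This is a generic rule of thumb;
# DeepSeek's tokenizer (and non-English text) may differ, so confirm with the
# real tokenizer before relying on the result.
WORDS_PER_TOKEN = 0.75


def estimated_tokens(word_count: int, words_per_token: float = WORDS_PER_TOKEN) -> int:
    """Rough token estimate for a document of `word_count` words."""
    return math.ceil(word_count / words_per_token)


def fits_in_context(word_count: int, context_tokens: int,
                    reserved_for_output: int = 8_000) -> bool:
    """True if the estimated prompt plus an output budget fits in the window.

    `reserved_for_output` is a hypothetical budget for the model's reply.
    """
    return estimated_tokens(word_count) + reserved_for_output <= context_tokens


print(estimated_tokens(500_000))            # 666667
print(fits_in_context(500_000, 1_000_000))  # True
print(fits_in_context(500_000, 128_000))    # False (a smaller, hypothetical window)
```

Rounding up with `math.ceil` keeps the estimate on the conservative side, which is the safer direction when deciding whether a prompt will be truncated.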

Why R2 is still missing

| Factor | Impact on timeline |
|---|---|
| Perfectionist review cycle | Liang Wenfeng, who owns 84 % of the company, reportedly ordered last-minute retraining after spotting edge-case failures in code generation. |
| Chip pivot | Shift from Nvidia to Huawei Ascend 910B caused a 2× slowdown in training throughput, according to TrendForce. |
| Data bottleneck | Domestic Chinese data is abundant but quality ranks below 60 % in internal audits, forcing extra manual cleaning. |
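The chip-pivot row can be restated in schedule terms. The sketch below assumes that wall-clock training time scales inversely with throughput and that the workload itself stays fixed, which is a simplification. Only the 2× slowdown comes from the table. The 12-week run and the 0.8 ratio are hypothetical.

```python
def schedule_slip(planned_weeks: float, throughput_ratio: float) -> float:
    """Extra weeks needed when hardware delivers only `throughput_ratio`
    (0 < ratio <= 1) of the planned training throughput.

    Assumes wall-clock time scales as 1 / throughput and the amount of
    training work does not change.
    """
    if not 0 < throughput_ratio <= 1:
        raise ValueError("throughput_ratio must be in (0, 1]")
    return planned_weeks * (1 / throughput_ratio - 1)


# Hypothetical 12-week training run
print(schedule_slip(12, 0.5))            # 2x slowdown: 12.0 extra weeks
print(round(schedule_slip(12, 0.8), 1))  # 80 % of planned throughput: 3.0 extra weeks
```

Under these assumptions, a 2× slowdown adds the full planned duration again, so the run takes twice as long.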

DeepSeek has officially denied every August launch rumour and says “no date is locked in yet” (TechNode).

Market ripple effect

- Price war intensifies: Average API cost per million tokens in China fell 28 % between January and August 2025 as rivals matched DeepSeek’s low-cost precedent (Fortune India).

- Venture capital pressure: Western VCs with 18-month exit clauses are walking away, while state-linked funds are stepping in offering 8- to 12-year horizons (TS2 insight).

Hiring signals long game

Job postings analysed by Frederick AI show new roles are 100 % research-focused:

- 37 PhD internships open (vs 3 applied-science roles).

- Zero sales, zero product-management posts advertised in 2025.

Take-away for users today

V3.1 is production-safe for anyone who needs longer context now; R2’s delay simply cements DeepSeek’s reputation for shipping “when it’s ready, not when the calendar says.”

What exactly is new in DeepSeek V3.1 and why did it launch so quietly?

DeepSeek V3.1 brings a single headline change – a much larger context window that lets the model read and reason over longer documents in one pass. Beyond that, the company has offered almost no additional details, a stark contrast to the drum-roll that surrounded earlier releases.

Two plausible explanations circulate among analysts:

- Momentum maintenance: With R2 slipping, the small bump keeps DeepSeek in the news cycle and reassures enterprise customers that the lab is still shipping.

- Internal tool first: V3.1 may have been built to solve a specific long-document problem inside High-Flyer’s trading pipeline before being pushed to the public repo.

Whatever the reason, the quiet drop pushed V3.1 downloads past 180 k in the first week on Hugging Face, a sign that “no marketing” can still work as a form of marketing.

Why is DeepSeek R2 delayed, and what are the remaining roadblocks?

The short answer is perfectionism plus hardware headaches.

- Software bugs keep spawning: Internal testers report that multi-step reasoning chains still fracture on 8-10 % of validation queries. Liang Wenfeng has set an internal bar of < 1 % failure rate, a threshold inherited from High-Flyer’s risk-averse culture.

- Chip shuffle slowed training: DeepSeek had to migrate part of the training workload from Nvidia H800s to Huawei Ascend 910B chips after fresh U.S. restrictions in March. Benchmarks show Ascend hardware delivers only 72 % of the tokens-per-watt efficiency the team expected, stretching timelines by at least six weeks.

- Data quality gap: Curators have scraped an extra 120 B Chinese tokens, but automated quality scores (measured by perplexity on held-out news articles) are 7 % worse than the English slice, forcing extra manual review cycles.
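The < 1 % failure bar on reasoning chains amounts to a simple release gate. The sketch below assumes that each validation query is scored as a plain pass or fail. The 1,000-query runs are hypothetical. The 9 % failure case was chosen from the middle of the reported 8-10 % range.

```python
def failure_rate(passed: list[bool]) -> float:
    """Fraction of validation queries whose reasoning chain failed."""
    if not passed:
        raise ValueError("need at least one validation result")
    return passed.count(False) / len(passed)


def meets_release_bar(passed: list[bool], max_failure_rate: float = 0.01) -> bool:
    """Gate: ship only if the observed failure rate is below the bar."""
    return failure_rate(passed) < max_failure_rate


# Hypothetical validation runs of 1,000 queries each
current = [False] * 90 + [True] * 910  # 9 % failures
target = [False] * 5 + [True] * 995    # 0.5 % failures
print(failure_rate(current), meets_release_bar(current))  # 0.09 False
print(failure_rate(target), meets_release_bar(target))    # 0.005 True
```

At 9 % the observed failure rate is about nine times the bar. Closing that gap with retraining alone helps explain why the schedule keeps slipping.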

Bottom line: insiders whisper the earliest realistic window is now mid-October to early November 2025, and even that assumes no further “surprises.”

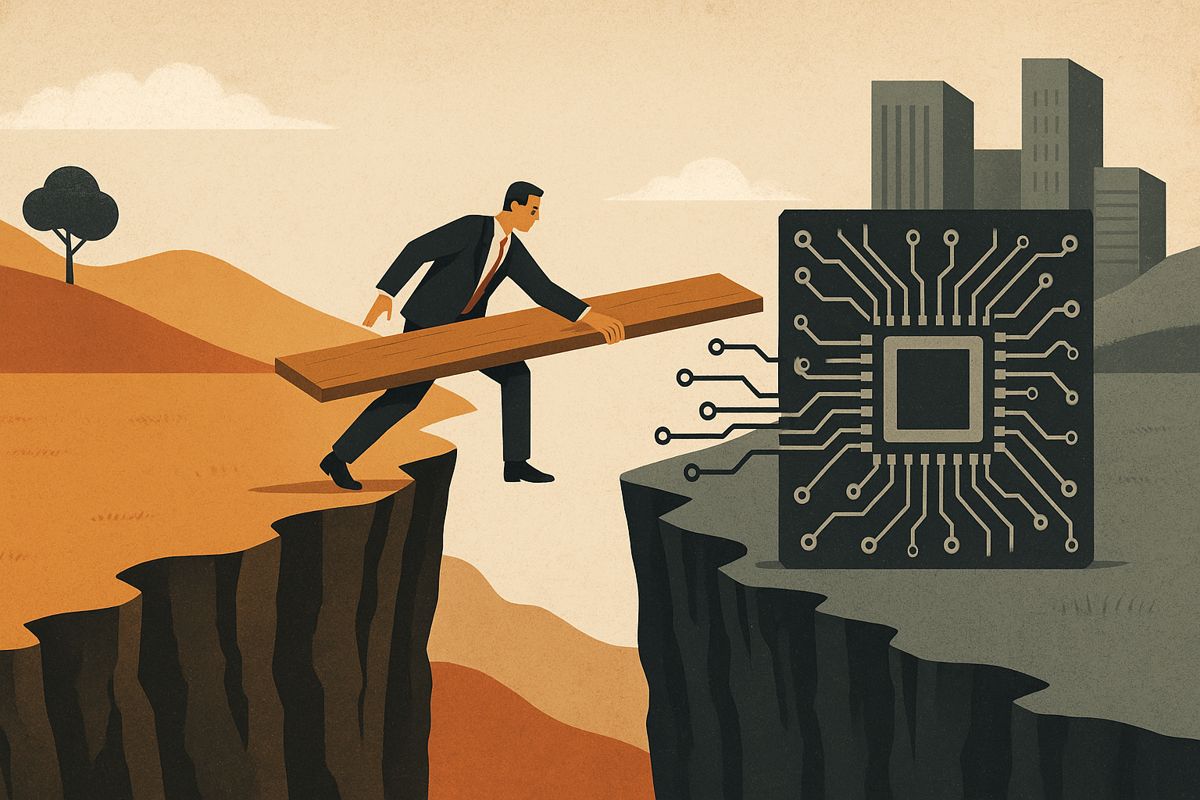

How does DeepSeek’s low-cost training approach affect global AI competition?

By squeezing state-of-the-art performance out of a reported $5.58 million and 2.8 M GPU-hours for V3’s final training run, DeepSeek has challenged the belief that frontier models require nine-figure budgets.
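The headline cost figure is straightforward arithmetic. The sketch below assumes 2.788 M H800 GPU-hours (rounded to 2.8 M above) and a rental rate of $2 per GPU-hour, both as given in DeepSeek’s V3 technical report. It also uses the roughly 2,000 GPUs quoted earlier in this article. The result covers rented compute for the final run only, not hardware purchases, earlier experiments, or staff.

```python
# Assumptions (labelled): 2.788 M H800 GPU-hours and a $2.00/GPU-hour rental
# rate, as stated in DeepSeek's V3 technical report; ~2,000 GPUs as quoted in
# this article. Hardware purchases, research runs and staff are not included.
GPU_HOURS = 2_788_000
USD_PER_GPU_HOUR = 2.00
NUM_GPUS = 2_000


def rental_cost_usd(gpu_hours: float, usd_per_gpu_hour: float) -> float:
    """Total rental cost of a training run, in US dollars."""
    return gpu_hours * usd_per_gpu_hour


def wall_clock_days(gpu_hours: float, num_gpus: int) -> float:
    """Days of wall-clock time if all GPUs run in parallel without downtime."""
    return gpu_hours / num_gpus / 24


cost = rental_cost_usd(GPU_HOURS, USD_PER_GPU_HOUR)
days = wall_clock_days(GPU_HOURS, NUM_GPUS)
print(f"${cost / 1e6:.2f} million over ~{days:.0f} days")
# → $5.58 million over ~58 days
```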

Impact so far:

- Hardware value compression: Analysts at Next Platform estimate the total addressable market for AI accelerators could shrink 10-20 x if similar efficiency tricks spread.

- Export-control rethink: Washington’s chip bans look less potent when H800-grade silicon delivers GPT-4-class results. Policy circles are already debating algorithm-focused rather than hardware-focused restrictions.

- Price war in China: Average inference cost per 1 k tokens fell 34 % across the top-5 domestic clouds within two months of V3’s release, a direct response to DeepSeek’s pricing.

In short, the race is no longer who owns the most GPUs, but who codes the smartest.

What is Liang Wenfeng’s long-term strategy for DeepSeek?

Research > Revenue is the guiding mantra. Liang owns 84 % of the company (no VC on the cap table), insulating DeepSeek from quarterly-earnings pressure.

Key planks of the 2025-2026 roadmap:

- AGI as north star: 60 % of compute is reserved for next-gen architectures (mixture-of-experts at 1.8 T parameters and beyond) rather than product features.

- Open-source leverage: Every core model ships under Apache-2.0 or MIT, building an external community that now counts more than 2 k active forks on GitHub.

- Measured monetization: Paid APIs exist, but margins are kept deliberately thin to maximize usage and data feedback instead of short-term cash.

As Liang told TIME in April 2025, “We’re not optimizing for exit valuation; we’re optimizing for papers that still look relevant in 2035.”

Should enterprises build on V3.1 or wait for R2?

| Dimension | V3.1 (available now) | R2 (expected Q4 2025) |
|---|---|---|
| Context length | Already doubled vs V3 | Rumored another 4-5 x jump |
| Reasoning accuracy | 91 % on MATH benchmark | Target 96-97 % |
| Deployment risk | Low (already shipping) | High (delays possible) |
| Support horizon | 12 months from release | Unknown |

Practical takeaway: Start with V3.1 for production workloads that need longer context today. Plan a shadow R2 environment so you can A/B test as soon as binaries drop. DeepSeek’s track record suggests upgrade scripts will be one-line affairs, minimizing migration pain.
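A shadow environment can be as simple as mirroring a sample of live prompts to the second model and logging both answers for later comparison. The sketch below assumes that each model is wrapped as a plain Python function from prompt to reply. The toy lambdas and the `make_shadow_router` name are hypothetical stand-ins, not DeepSeek APIs. In practice the two functions would wrap real API clients for V3.1 and, once it ships, R2.

```python
import random
from typing import Callable, List, Optional, Tuple

# Hypothetical stand-in type: a "model" is any function from prompt to reply.
Model = Callable[[str], str]


def make_shadow_router(primary: Model, shadow: Model, sample_rate: float = 0.1,
                       rng: Optional[random.Random] = None):
    """Serve every request from `primary` and mirror a sample to `shadow`.

    Returns (handle, log): `handle(prompt)` gives the user-facing reply, and
    `log` collects (prompt, primary_reply, shadow_reply) tuples for offline
    comparison. Shadow replies are never returned to users.
    """
    rng = rng or random.Random()
    log: List[Tuple[str, str, str]] = []

    def handle(prompt: str) -> str:
        reply = primary(prompt)  # users always get the primary model's answer
        if rng.random() < sample_rate:
            try:
                shadow_reply = shadow(prompt)
            except Exception as exc:  # a failing shadow must not break users
                shadow_reply = f"<shadow error: {exc}>"
            log.append((prompt, reply, shadow_reply))
        return reply

    return handle, log


# Usage with toy models (hypothetical)
handle, log = make_shadow_router(lambda p: "v3.1: " + p, lambda p: "r2: " + p,
                                 sample_rate=1.0)
print(handle("summarise this contract"))  # v3.1: summarise this contract
print(len(log))                           # 1
```

This sketch calls the shadow model synchronously, which adds its latency to every sampled request. A production setup would more likely run the shadow call asynchronously or from a queue.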